Introduction

Every day, Wayfair receives tens of thousands of messages from customers telling us about their experiences with our products and services through product reviews, return comments, and various surveys. Considering that our customers spend valuable time writing these messages, it is fair to assume that they have something worthwhile to tell us. In this blog post, we look at how we extract information from the text of these messages using Natural Language Processing (NLP), specifically Google’s BERT model.

Motivation

Some of the information that our customers supply is fairly easy to interpret automatically. Star ratings (Fig. 1), for example, capture overall customer sentiment directly in numerical form. Information contained in text, on the other hand, is more nuanced. This complexity makes it more difficult, but also more valuable, to extract this information and translate it into structured, quantifiable insights. Such information has a long list of potential applications. For example, it would allow us to:

- Find products whose pictures on our website have reportedly poor color reproduction (see Fig. 1 for an example), and improve imagery to better match the product.

- Find products with inaccurate descriptions on the website (e.g. “stand is 10’’ wide, not 12’’ like it says on the website”) and correct these mistakes.

- Identify poor delivery experiences (“They refused to carry the package up the stairs, even though I paid for full service”) to detect trends that may require action.

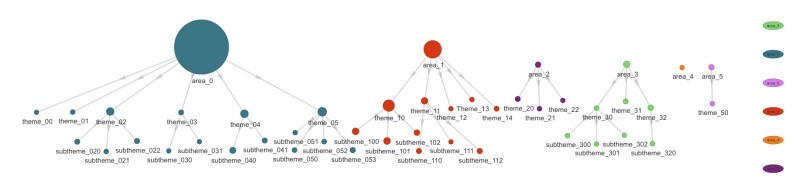

Prior to our implementation of BERT, much of our user-feedback insight came from humans reading customer messages and classifying them into a number of categories. This practice had several drawbacks. First, it is time-consuming and thus expensive, which limits how much feedback can be annotated. To keep annotation time per text sample short, the coding schemes (“taxonomies”) used before this project were rather simple, and not all user feedback was processed in this way. This limited the possible insights. Additionally, various groups within Wayfair used problem-specific taxonomies, limiting cross-team synergies (see Fig. 2, top).

Improving Our Approach

To improve on this status quo, we set up a taxonomy that is general enough to apply to a large swath of user feedback, and we implemented a machine learning model that produces the corresponding annotations automatically. This resolves our most pressing problem with human annotation: the model scales easily to a large volume of text, which it processes quickly and at low cost. Because the new taxonomy was designed to be usable in different contexts, it also enables cooperation between teams.

Setting up a single taxonomy that is useful to the majority of business functions while still being amenable to machine learning algorithms was a challenge in its own right, but that discussion is better suited to a sociology blog than a tech one. So here we focus on the machine learning aspect. Suffice it to say that the resulting scheme is rather complex: it tags texts along three dimensions, the most prominent of which has a tree structure to capture the different levels of detail that may appear in user feedback. In total, the taxonomy comprises more than 80 flags, which can occur in more than 4000 combinations.

With such a complex task, it is rather risky to replace the human annotation workflow in one fell swoop with our NLP model. To check that the model is performing as expected, there is continuous quality control with a human-in-the-loop process (see Fig. 2, bottom). In addition to quality control, this process generates additional training data focused on texts where the model produced results of high uncertainty, allowing us to improve the automatic annotation over time. Even with this setup, the manpower requirements are substantially reduced and can likely be reduced further in the near future.

NLP Refresher

Labeling texts is not a new problem, and there is a long list of tools out there that one can use for such a task. Here’s a brief refresher on the most common techniques and a more detailed description of the one we ended up using.

For language processing it is necessary to transform words from strings of letters into representations more amenable to computer processing. To do this, we represent each word as a vector. A number of schemes to embed words into a vector space have been developed and are available as easy-to-use packages (e.g. word2vec, fastText, GloVe). These are built on the assumption that semantically similar words should be represented by vectors that are close to each other in vector space. More technically, this is implemented as a look-up table with a vector for each word, which is optimized on synthetic tasks related to co-occurrence, e.g. given the embedding of a word, predicting words in its context.
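To make the “look-up table trained on co-occurrence” idea concrete, here is a minimal sketch, assuming a word2vec-style skip-gram setup: it generates the (center word, context word) training pairs such a model learns from, next to a randomly initialised embedding table. The function names, window size, and 8-dimensional vectors are illustrative choices, and the actual training step (adjusting table rows so that context words become predictable) is omitted.

```python
import numpy as np

def skipgram_pairs(tokens, window=2):
    """Pair each word with every neighbour at most `window` positions away."""
    pairs = []
    for i, center in enumerate(tokens):
        lo, hi = max(0, i - window), min(len(tokens), i + window + 1)
        for j in range(lo, hi):
            if j != i:
                pairs.append((center, tokens[j]))
    return pairs

# A static embedding is just a look-up table: one row per vocabulary word.
tokens = "the table leg is wobbly".split()
vocab = {w: i for i, w in enumerate(sorted(set(tokens)))}
rng = np.random.default_rng(0)
embedding_table = rng.normal(size=(len(vocab), 8))  # random until trained

def embed(word):
    """Return the (context-independent) vector stored for `word`."""
    return embedding_table[vocab[word]]

pairs = skipgram_pairs(tokens, window=1)
print(pairs[:3])  # [('the', 'table'), ('table', 'the'), ('table', 'leg')]
```

Training would push the vectors of words that share contexts closer together; whatever the result, `embed("leg")` returns the same row in every sentence.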

However, such embeddings assign a single vector to each word and cannot represent contextual differences. For example, the vector for “leg” needs to represent a chicken leg just as much as a segment of a multiple-flight journey. Considering the complex taxonomy we have in mind, static word embeddings are too simplistic for our use case. To extract meaning from text, it helps to look at the sequence of words in whole sentences or paragraphs. A common method is to feed static word vectors one at a time into a recurrent neural network (RNN) and use the RNN’s hidden state as a representation of the meaning of the whole text. Unfortunately, this can run into trouble: the words in a text can have very long-range relationships, which are hard for RNNs to learn, even with long short-term memory (LSTM) cells.
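The contrast between static vectors and an RNN’s running state can be sketched as follows, assuming a plain (Elman) RNN with random, untrained weights rather than an LSTM; all sizes and names are illustrative. The static vector for “leg” is identical in both sentences, while the hidden state at “leg” already depends on the preceding words.

```python
import numpy as np

rng = np.random.default_rng(0)
DIM, HIDDEN = 8, 16

# Static embeddings: a fixed look-up table (toy vocabulary of up to 100 words).
vocab = {}
table = rng.normal(size=(100, DIM))

def static_vector(word):
    idx = vocab.setdefault(word, len(vocab))  # give unseen words the next free row
    return table[idx]

# Untrained Elman RNN parameters (random, for illustration only).
W_xh = rng.normal(scale=0.5, size=(HIDDEN, DIM))
W_hh = rng.normal(scale=0.5, size=(HIDDEN, HIDDEN))
b_h = np.zeros(HIDDEN)

def rnn_states(words):
    """Feed static vectors through the RNN word by word; return every hidden state."""
    h = np.zeros(HIDDEN)
    states = []
    for w in words:
        h = np.tanh(W_xh @ static_vector(w) + W_hh @ h + b_h)
        states.append(h)
    return np.array(states)

s1 = "grilled chicken leg with rice".split()
s2 = "the second leg of the flight".split()
leg_static = static_vector("leg")             # same vector in any sentence
h1 = rnn_states(s1)[s1.index("leg")]          # state after reading "grilled chicken leg"
h2 = rnn_states(s2)[s2.index("leg")]          # state after reading "the second leg"
text_vector = rnn_states(s2)[-1]              # final state as a whole-text representation
```

Information about early words reaches the final state only through repeated multiplication by `W_hh`, which is one way to see why long-range relationships are hard for RNNs to learn.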

More recently, significant progress in overcoming these issues has come from models based on so-called “transformer” architectures. Transformers may well power a breakthrough for NLP as significant as the introduction of pre-trained convolutional networks was for image-related tasks. We use one of these transformer models, Google’s BERT, to tackle our customer feedback.

An Introduction to BERT

Below, we will give a brief introduction to the BERT model. To learn more about the model, check out Google’s full paper [1]. If you are already familiar with BERT, feel free to skip this section.

The basic concept behind BERT is to transform each word vector over several stages, using the vector of the word itself and the vectors of all other words in the sentence as inputs. In addition, at the beginning of the transformation chain, a position vector is added to each word vector. This vector does not depend on the word, only on its position in the text (see Fig. 3), which lets the model take word order into account when relating words to one another.
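Adding position vectors can be sketched in a few lines. This assumes learned position embeddings as in BERT, here simply initialised at random; BERT additionally adds a segment embedding and applies layer normalisation, both omitted for brevity. The table sizes are toy values.

```python
import numpy as np

rng = np.random.default_rng(0)
vocab_size, max_len, d_model = 1000, 128, 16

# Both tables are learned during pre-training; random values stand in for them here.
token_table = rng.normal(scale=0.02, size=(vocab_size, d_model))
position_table = rng.normal(scale=0.02, size=(max_len, d_model))

def input_embeddings(token_ids):
    """Word vector plus a vector that depends only on the position in the text."""
    token_ids = np.asarray(token_ids)
    positions = np.arange(len(token_ids))
    return token_table[token_ids] + position_table[positions]

x = input_embeddings([5, 42, 5])  # token 5 appears at positions 0 and 2
```

Because the same token at two positions now receives different input vectors, later layers can distinguish them even though attention itself is order-agnostic.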

Each of the transformation stages implements an attention mechanism. For each of the input vectors, we compute a key, a query, and a value representation by simple matrix multiplication with matrices learned in training. We then determine how much attention the word being transformed should pay to each word (including itself) by computing the scaled dot product of its query vector with the key vectors of all words. The transformed representation is the weighted sum of the value vectors of all words, where the weights are the softmax-normalized scaled dot products (Fig. 4).
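The attention step described above corresponds to the following minimal NumPy sketch of single-head scaled dot-product self-attention. The random projection matrices stand in for the matrices learned in training, and the sizes are arbitrary.

```python
import numpy as np

def softmax(z, axis=-1):
    z = z - z.max(axis=axis, keepdims=True)  # subtract the max for numerical stability
    e = np.exp(z)
    return e / e.sum(axis=axis, keepdims=True)

def self_attention(X, W_q, W_k, W_v):
    """X: (n_words, d_model); W_*: (d_model, d_k). Returns outputs and attention weights."""
    Q, K, V = X @ W_q, X @ W_k, X @ W_v        # query, key, value for every word
    scores = Q @ K.T / np.sqrt(K.shape[-1])    # scaled dot products, (n_words, n_words)
    weights = softmax(scores, axis=-1)         # row i: how much word i attends to each word
    return weights @ V, weights                # weighted sum of value vectors

rng = np.random.default_rng(0)
X = rng.normal(size=(4, 8))                    # four words, 8-dim vectors
W_q, W_k, W_v = (rng.normal(size=(8, 8)) for _ in range(3))
out, weights = self_attention(X, W_q, W_k, W_v)
```

Each row of `weights` sums to one, so every output vector is a convex combination of the value vectors.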

Because only one softmax is evaluated per attention mechanism, attention tends to concentrate on one individual word at a time, so a single attention mechanism (called a “head”) is largely limited to one type of semantic or grammatical relationship. To overcome this, BERT uses multiple attention heads and concatenates their outputs. Concatenating full-length representations would quickly grow the word vectors to infeasible sizes, so each head operates on vectors only a fraction of the width of the base word embedding (in BERT-Base, 12 heads of width 64 for a 768-dimensional embedding), and their outputs are concatenated back to the original width. This concatenated output is fed through a small feed-forward network with one hidden layer. After each of these two steps, ResNet-like Add & Norm operations (a residual connection followed by layer normalization) are applied to improve training convergence (see Fig. 5 for a graphical representation of this architecture).
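Putting these pieces together, one encoder layer can be sketched as below. This is a simplified NumPy illustration, not BERT’s actual code: the learned gain and bias of layer normalisation and all dropout are omitted, the weights are random, and the widths are toy values. Slicing one full-width projection into per-head chunks is equivalent to giving each head its own narrower projection matrices.

```python
import numpy as np

def softmax(z, axis=-1):
    z = z - z.max(axis=axis, keepdims=True)
    e = np.exp(z)
    return e / e.sum(axis=axis, keepdims=True)

def layer_norm(x, eps=1e-12):
    mu = x.mean(axis=-1, keepdims=True)
    var = x.var(axis=-1, keepdims=True)
    return (x - mu) / np.sqrt(var + eps)       # learned gain/bias omitted

def gelu(x):
    # tanh approximation of GELU, the activation in BERT's feed-forward layers
    return 0.5 * x * (1 + np.tanh(np.sqrt(2 / np.pi) * (x + 0.044715 * x**3)))

def multi_head_attention(X, W_q, W_k, W_v, W_o, n_heads):
    n, d_model = X.shape
    d_head = d_model // n_heads                # each head works on a narrow slice
    Q, K, V = X @ W_q, X @ W_k, X @ W_v
    heads = []
    for h in range(n_heads):
        s = slice(h * d_head, (h + 1) * d_head)
        weights = softmax(Q[:, s] @ K[:, s].T / np.sqrt(d_head))
        heads.append(weights @ V[:, s])
    return np.concatenate(heads, axis=-1) @ W_o   # concatenated back to d_model

def encoder_layer(X, p, n_heads):
    attn = multi_head_attention(X, p["W_q"], p["W_k"], p["W_v"], p["W_o"], n_heads)
    X = layer_norm(X + attn)                       # Add & Norm after attention
    ffn = gelu(X @ p["W_1"] + p["b_1"]) @ p["W_2"] + p["b_2"]  # one hidden layer
    return layer_norm(X + ffn)                     # Add & Norm after feed-forward

rng = np.random.default_rng(0)
d_model, d_ff, n_heads = 16, 64, 4
p = {k: rng.normal(scale=0.1, size=(d_model, d_model)) for k in ("W_q", "W_k", "W_v", "W_o")}
p.update(W_1=rng.normal(scale=0.1, size=(d_model, d_ff)), b_1=np.zeros(d_ff),
         W_2=rng.normal(scale=0.1, size=(d_ff, d_model)), b_2=np.zeros(d_model))
Y = encoder_layer(rng.normal(size=(5, d_model)), p, n_heads)
```

BERT stacks a number of such layers (12 in BERT-Base), each with its own parameters.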

All of this sounds rather complex, and to some extent it is: even the smaller of the pretrained models published by Google (BERT-Base) has more than one hundred million free parameters to learn. Training it obviously requires a huge amount of data. Google exploits the fact that large amounts of human-written text are available, and that some NLP tasks can be trained on such text without explicit labeling. For BERT specifically, two synthetic tasks are used:

- “Masking”: replacing some of the words in the input text with a placeholder and training the model to guess the original words.

- “Next sentence prediction”: taking two sentences, either consecutive or randomly chosen, and training the model to guess whether they belong together.
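Both synthetic tasks can generate training examples from raw text alone. The sketch below follows the recipe described in the BERT paper: about 15% of token positions are selected, and of those, 80% become a mask token, 10% a random word, and 10% stay unchanged; sentence pairs are true successors half of the time. It works on whole words instead of BERT’s WordPiece tokens, and the function names are illustrative.

```python
import random

MASK = "[MASK]"

def mask_tokens(tokens, vocab, rng, mask_prob=0.15):
    """Return (corrupted tokens, {position: original token}) for masked-word prediction."""
    n_to_mask = max(1, round(len(tokens) * mask_prob))
    positions = rng.sample(range(len(tokens)), n_to_mask)
    corrupted = list(tokens)
    targets = {}
    for i in positions:
        targets[i] = tokens[i]                # the model must predict this original token
        r = rng.random()
        if r < 0.8:
            corrupted[i] = MASK               # 80%: replace with the placeholder
        elif r < 0.9:
            corrupted[i] = rng.choice(vocab)  # 10%: replace with a random word
        # remaining 10%: leave the token unchanged
    return corrupted, targets

def nsp_example(doc, corpus, rng):
    """Return (sentence_a, sentence_b, is_next); 50% true next sentence, 50% random."""
    i = rng.randrange(len(doc) - 1)
    if rng.random() < 0.5:
        return doc[i], doc[i + 1], True
    other_doc = rng.choice([d for d in corpus if d is not doc])
    return doc[i], rng.choice(other_doc), False

rng = random.Random(0)
tokens = "the rug arrived quickly but the color is off".split()
corrupted, targets = mask_tokens(tokens, tokens, rng)
```

No human labels are involved: the original words and the true sentence order serve as the targets.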

This training procedure produces a generic model that is well trained to represent the meaning of a large variety of texts. Using this pretrained model got us much of the way to our system for automatic annotation. On top of it, we only needed to add a simple classification layer and to train the whole model on a relatively modest amount of text that had been labeled by humans according to our taxonomy.
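The added classification layer is small compared to the transformer underneath. As a rough sketch, assuming a random stand-in for the encoder output and a plain linear layer on the vector of the leading [CLS] token (BERT’s own classifier first passes this vector through an extra dense “pooler” layer, omitted here), a standard single-label head looks like this:

```python
import numpy as np

rng = np.random.default_rng(0)
seq_len, hidden, n_classes = 12, 768, 5

# Stand-in for the encoder output: one 768-dim vector per token; position 0 is [CLS].
hidden_states = rng.normal(size=(seq_len, hidden))

# The only new parameters: one linear layer, learned during fine-tuning.
W = rng.normal(scale=0.02, size=(hidden, n_classes))
b = np.zeros(n_classes)

def classify(hidden_states, W, b):
    cls = hidden_states[0]                 # whole-text representation
    logits = cls @ W + b
    e = np.exp(logits - logits.max())      # softmax: probabilities over exclusive classes
    return e / e.sum()

probs = classify(hidden_states, W, b)
```

During fine-tuning, gradients flow through this layer into all transformer weights, which is why a modest labeled dataset suffices.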

Making BERT Work for Wayfair

Having the pretrained BERT model available saved us a great deal of effort, but our project didn’t fit perfectly into the framework provided by Google. To get the most out of our customers’ texts, we modified BERT and the surrounding software as follows.

- We enabled multi-label classification. Out of the box, BERT only handles single-label classification tasks. As we wanted to analyze texts in which customers discuss, for example, both the product and the delivery experience, we needed multi-label classification. We implemented this by modifying the loss function: we replaced the softmax layer with a sigmoid and used its output in a binary cross-entropy loss.

- To mitigate considerable class imbalance, we weighted the loss function, trading recall against precision for each label separately.

- We devised a scheme that flattens the tree-structured taxonomy into a more ML-friendly representation during preprocessing and reassembles it during post-processing. We did this by encoding each annotation as a multi-hot vector and then decoding it back into a hierarchical structure by exploiting some of our tree’s properties.

- We implemented the logic to evaluate the probabilities for each label, so that texts with low-confidence annotations are sent to human annotators.
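The following sketch illustrates the kinds of changes listed above, under several assumptions: the five-node taxonomy is a hypothetical toy example, `pos_weight` is one common way to trade recall against precision per label (not necessarily the weighting we used), the decoding rule (drop nodes whose parent is inactive, report the deepest active paths) is one plausible way to exploit the tree structure, and the confidence band in `needs_review` is an arbitrary placeholder.

```python
import numpy as np

# Hypothetical toy taxonomy; each node is a path from the root.
NODES = ["Product", "Product/Quality", "Product/Color", "Delivery", "Delivery/Damage"]
INDEX = {n: i for i, n in enumerate(NODES)}

def encode(paths):
    """Multi-hot encode tree paths; every ancestor of a path is switched on too."""
    y = np.zeros(len(NODES))
    for path in paths:
        parts = path.split("/")
        for k in range(1, len(parts) + 1):
            y[INDEX["/".join(parts[:k])]] = 1.0
    return y

def decode(y_hat, threshold=0.5):
    """Keep active nodes whose ancestors are all active; return the deepest paths."""
    active = {n for n, p in zip(NODES, y_hat) if p >= threshold}
    consistent = {n for n in active
                  if all("/".join(n.split("/")[:k]) in active for k in range(1, n.count("/") + 1))}
    return sorted(n for n in consistent if not any(m.startswith(n + "/") for m in consistent))

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def weighted_bce(logits, targets, pos_weight, eps=1e-7):
    """Independent sigmoid per label plus binary cross-entropy.
    pos_weight > 1 favours recall on a label; < 1 favours precision."""
    p = np.clip(sigmoid(logits), eps, 1 - eps)
    loss = -(pos_weight * targets * np.log(p) + (1 - targets) * np.log(1 - p))
    return loss.mean()

def needs_review(probs, low=0.2, high=0.8):
    """Route a text to human annotators if any label probability is uncertain."""
    return bool(np.any((probs > low) & (probs < high)))

y = encode(["Product/Color", "Delivery"])    # -> [1, 0, 1, 1, 0]
labels = decode(np.array([0.9, 0.2, 0.8, 0.7, 0.1]))
```

Unlike a softmax, the sigmoid scores each label independently, so a text can be tagged with both a product issue and a delivery issue at once.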

We set up the production system for maximum flexibility by deploying two microservices: a RESTful Flask service that takes client requests and does pre- and post-processing, and a TensorFlow Serving service that serves the actual model predictions and is called by the Flask service between pre- and post-processing. Both services run in production on a Kubernetes cluster, which offers us handy features like load-dependent autoscaling. On the client side, the service is used to fill our databases with the model’s annotations and to organize the human-in-the-loop process with a custom quality-assurance tool. Shout out to our engineering partners who made all of this possible!
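The call from the pre-/post-processing service to TensorFlow Serving can be sketched with the standard library alone, using TensorFlow Serving’s REST predict API (`POST /v1/models/<name>:predict` with an `"instances"` body, answered with `"predictions"`). The host, port, model name, and the input name `input_ids` are assumptions; the real input names depend on the exported model signature, and a BERT export typically expects further inputs such as an attention mask.

```python
import json
import urllib.request

# Hypothetical endpoint; 8501 is TensorFlow Serving's default REST port.
TF_SERVING_URL = "http://localhost:8501/v1/models/feedback_classifier:predict"

def build_payload(token_id_batches):
    """Row-format request body; the input name 'input_ids' is an assumption."""
    return {"instances": [{"input_ids": ids} for ids in token_id_batches]}

def call_model(payload, url=TF_SERVING_URL, timeout=5.0):
    """POST the payload to TensorFlow Serving and return the decoded JSON response."""
    req = urllib.request.Request(
        url,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )
    with urllib.request.urlopen(req, timeout=timeout) as resp:
        return json.loads(resp.read().decode("utf-8"))

def parse_predictions(response, labels):
    """Map each row of per-label probabilities back to label names."""
    return [dict(zip(labels, row)) for row in response["predictions"]]
```

In the Flask service, a route handler would tokenize the incoming texts, pass them through `build_payload` and `call_model`, and hand the parsed probabilities to post-processing, such as reassembling the tree-structured annotation. Keeping the model in a separate serving container lets each side scale independently.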

Our Results

We are now running the classification live on incoming text data, which has many advantages over our previous workflow:

- Savings: We save substantial amounts of money, because computers are inexpensive compared to a dedicated workforce analyzing text data.

- Consistency: Our results are more consistent, as every text gets coded to a common standard.

- Rigor: We obtain sharper insights because the model allows for a more complex taxonomy than humans could annotate at scale.

- Speed: The BERT-based annotation model is tightly integrated into the data flow, so insights are available almost immediately, compared to the longer turnaround time of human annotation. Even though the model is very complex, inference is fast enough to annotate several comments per second on a CPU and about a hundred comments per second on a GPU.

Conclusion

BERT does serious business for us: the model provides valuable insights and helps us improve the customer experience. But we are always looking to further improve our NLP tools, and we are exploring projects in the following areas:

- Downstream tooling: Although the number of classes we use might look large, there are still many nuances in our customers’ opinions that our classification cannot capture. We purposefully designed our taxonomy to be broadly applicable, so we know we are missing things commonly commented on for specific types of products (for example, the pile of a carpet). We are currently looking into feeding subsets of the data, filtered by the classification results, into unsupervised topic models to get a more detailed picture.

- Introducing a multilingual model: While most of our business comes from English-speaking markets, significant fractions of our customer feedback are in French and German. A unified multilingual model for these languages would let us leverage our large English-language datasets to improve classification for French and German as well.

- Reducing training data requirements: We are looking into semi-supervised approaches to reduce the number of hand-annotated samples required for training when extending to further data sources.

With these ideas, we hope to follow our customers along their entire journey through the Wayfair experience and make it ever more enjoyable. Stay tuned for developments on these projects here on the blog!

Special Thanks

Google BERT team, Robert Koschig, Cristina Mastrodomenico (+team), Christian Rehm, Jonathan Wang

References

[1] J. Devlin et al., “BERT: Pre-training of Deep Bidirectional Transformers for Language Understanding,” arXiv:1810.04805, 2018.